In a world overflowing with data, wouldn't it be amazing to effortlessly extract the exact information you need from websites and online resources? That's where web scraping comes in!

With web scraping techniques, we can extract many kinds of data from web pages and store it in whatever format we prefer.

This article introduces web scraping with Python and Selenium.

Getting started

To get started with coding, follow these steps:

- Choose a Text Editor: Open up your desired text editor. Visual Studio Code (VS Code) is a popular choice.

- Install Python: make sure Python is installed on your machine. You can download it from the official website, https://www.python.org/downloads/.

Once Python is installed, you can verify the installation by opening a terminal or command prompt and typing:

python --version

On macOS and Linux, the command may be python3 --version instead.

Install Selenium with pip:

With Python installed, use the pip package manager to install Selenium by running this command in the terminal:

pip install selenium
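To confirm the installation from Python itself, a small check like the one below can help. It is a sketch rather than part of the original tutorial: it reads the installed Selenium version with the standard library's importlib.metadata, and the helper names installed_selenium_version and is_selenium_4_or_newer are made up for this example. The major version matters because Selenium 4 locates elements with driver.find_element(By.CSS_SELECTOR, ...), while the older find_element_by_css_selector helpers were deprecated in Selenium 4 and removed in later 4.x releases.

```python
import sys
from importlib.metadata import PackageNotFoundError, version


def installed_selenium_version():
    """Return the installed Selenium version string, or None if it is not installed."""
    try:
        return version("selenium")
    except PackageNotFoundError:
        return None


def is_selenium_4_or_newer(version_string):
    """True if a version string such as "4.21.0" has a major version of 4 or higher."""
    major = int(version_string.split(".")[0])
    return major >= 4


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    found = installed_selenium_version()
    if found is None:
        print("Selenium is not installed; run: pip install selenium")
    else:
        label = "(Selenium 4 API)" if is_selenium_4_or_newer(found) else "(older API)"
        print("Selenium", found, label)
```

Save it as a file (the name is up to you) and run it with python from the same terminal you used for pip.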

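Setting up WebDriver

Selenium controls the browser through a WebDriver, a separate program that acts as a bridge between your script and the browser, so we need to download one.

The WebDriver you need depends on the browser you use. Since Chrome is one of the most widely used browsers, here is a step-by-step guide to setting up ChromeDriver, the WebDriver for Chrome. (Selenium 4.6 and later include Selenium Manager, which can download a matching driver automatically when you call webdriver.Chrome(), so on recent versions these manual steps are often optional.)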

- Download ChromeDriver: Download the ChromeDriver version that matches your Chrome browser version from https://sites.google.com/chromium.org/driver/ (for Chrome 115 and newer, that page points to the Chrome for Testing downloads).

- Extract ChromeDriver: Once the download finishes, extract the ZIP file (if applicable) to a folder on your computer.

- Add ChromeDriver to PATH: Add the folder containing ChromeDriver to your system's PATH environment variable, which lets you run it from any directory without specifying its full path each time.

The steps below explain how to add the WebDriver folder to the PATH variable.

On Windows

- Right-click on the "This PC" or "My Computer" icon on your desktop and select "Properties."

- In the window that opens, click the "Advanced system settings" link.

- In the System Properties dialog, click on the "Environment Variables" button at the bottom.

- Under the System Variables section, locate and select the "Path" variable, then click the "Edit" button.

- In the Edit Environment Variable window, click the "New" button and then add the full path to the directory where you extracted the WebDriver (e.g., C:\path\to\webdriver\directory).

- Click "OK" to close each window, and restart your command prompt or any open terminals for the changes to take effect.

On macOS

- Open a terminal. Depending on your shell, open its profile configuration file in a text editor: ~/.zshrc for zsh (the default shell on recent macOS versions) or ~/.bash_profile for bash. For example, to open the .zshrc file, run:

nano ~/.zshrc

- Add the following line at the end of the file, replacing /path/to/driver with the actual path to your WebDriver directory:

export PATH=$PATH:/path/to/driver

- Save the file and restart the terminal to apply the changes.
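Whichever operating system you use, you can check from Python whether the driver is actually reachable after updating PATH. This sketch relies on the standard library's shutil.which, which searches the PATH directories (and on Windows also tries extensions such as .exe listed in PATHEXT). The helper name find_chromedriver is hypothetical, and it assumes the executable is named chromedriver.

```python
import shutil


def find_chromedriver(search_path=None):
    """Return the full path to chromedriver if it can be found, else None.

    search_path defaults to the PATH environment variable; pass a directory
    (or an os.pathsep-separated list of directories) to search elsewhere.
    """
    return shutil.which("chromedriver", path=search_path)


if __name__ == "__main__":
    location = find_chromedriver()
    if location:
        print("chromedriver found at", location)
    else:
        print("chromedriver is not on PATH; open a new terminal and re-check the steps above")
```

If the driver is reported missing right after editing PATH, the most common cause is a terminal that was opened before the change.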

Writing the Web Scraping Script

Now that we have our environment set up, let's start writing the web scraping script using Python and Selenium. In this example, we'll scrape some basic information from a target website.

from selenium import webdriver

driver = webdriver.Chrome()

url = "https://www.example.com"
driver.get(url)

We use the "webdriver" tools from Selenium to control web browsers automatically. By running "webdriver.Chrome()", we launch a special Chrome browser that we control with Selenium. We store a website's address in a "url" variable to tell the browser where to go. Then, with "get()", we make the browser open the chosen website, just like typing an address in Chrome and hitting Enter.
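webdriver.Chrome() also accepts options. As a hedged sketch, assuming Selenium 4 and a recent Chrome, the code below can start Chrome in headless mode (no visible window, which is handy on servers) and can optionally point Selenium at a specific driver file through a Service object instead of relying on PATH. The helper names chrome_arguments and make_chrome are hypothetical; --headless=new and --window-size are Chrome command-line switches.

```python
def chrome_arguments(headless=True, window_size=(1280, 800)):
    """Build the list of Chrome command-line switches used by make_chrome()."""
    width, height = window_size
    args = [f"--window-size={width},{height}"]
    if headless:
        args.append("--headless=new")  # run Chrome without opening a window
    return args


def make_chrome(headless=True, driver_path=None):
    """Start a Selenium-controlled Chrome (assumes Selenium 4 and Chrome are installed)."""
    # Imported here so chrome_arguments() can be reused without Selenium installed
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)

    if driver_path:
        # Use this exact chromedriver file instead of searching PATH
        return webdriver.Chrome(service=Service(executable_path=driver_path), options=options)
    return webdriver.Chrome(options=options)
```

With this helper, driver = make_chrome() followed by driver.get(url) behaves like the script above, just without a visible window; pass headless=False to watch the browser while debugging.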

# Extracting information from the website
from selenium.webdriver.common.by import By

# Replace "css-selector" with the CSS selector of the element you want
element = driver.find_element(By.CSS_SELECTOR, "css-selector")
data = element.text
print(data)

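How do you tell the browser exactly what you want? That's where CSS selectors come in. A CSS selector is a pattern that points to elements on a page, for example by tag name (h1), class (.product-name), or ID (#price). In the code, replace "css-selector" with the selector for the element you are interested in, and Selenium returns the first element that matches it. To find a selector, right-click the element in Chrome, choose Inspect, and look at its tag, class, and id attributes.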
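One detail worth knowing: in Selenium 4, find_element raises a NoSuchElementException when nothing on the page matches the selector, while find_elements simply returns an empty list. The sketch below uses that to return None instead of crashing; the helper name text_or_none is hypothetical, and it passes the string "css selector", which is the value of Selenium's By.CSS_SELECTOR, so it needs no extra import.

```python
# "css selector" is the value of selenium.webdriver.common.by.By.CSS_SELECTOR
CSS_SELECTOR = "css selector"


def text_or_none(driver, selector):
    """Return the text of the first element matching a CSS selector, or None.

    Works with a Selenium WebDriver or with a single element, since both
    provide find_elements(by, value).
    """
    matches = driver.find_elements(CSS_SELECTOR, selector)
    return matches[0].text if matches else None
```

For example, data = text_or_none(driver, "h1") gives the page heading, or None if the page has no h1 element.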

driver.quit()

After we're done with the scraping, it's important to clean up by closing the browser window and releasing associated resources. We do this using the quit() method of the WebDriver.
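If an error occurs before driver.quit() is reached (for example, a selector that matches nothing), the browser is left running. A common safeguard is a try/finally block, sketched below. The names scrape_text and make_driver are hypothetical; make_driver can be any function that returns a driver, such as webdriver.Chrome.

```python
def scrape_text(url, selector, make_driver):
    """Open url, return the text of the first element matching selector,
    and always close the browser, even if something goes wrong."""
    driver = make_driver()
    try:
        driver.get(url)
        # "css selector" is the value of selenium's By.CSS_SELECTOR
        return driver.find_element("css selector", selector).text
    finally:
        driver.quit()  # runs whether the lines above succeed or raise
```

A call might look like scrape_text("https://www.example.com", "h1", webdriver.Chrome), after from selenium import webdriver.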

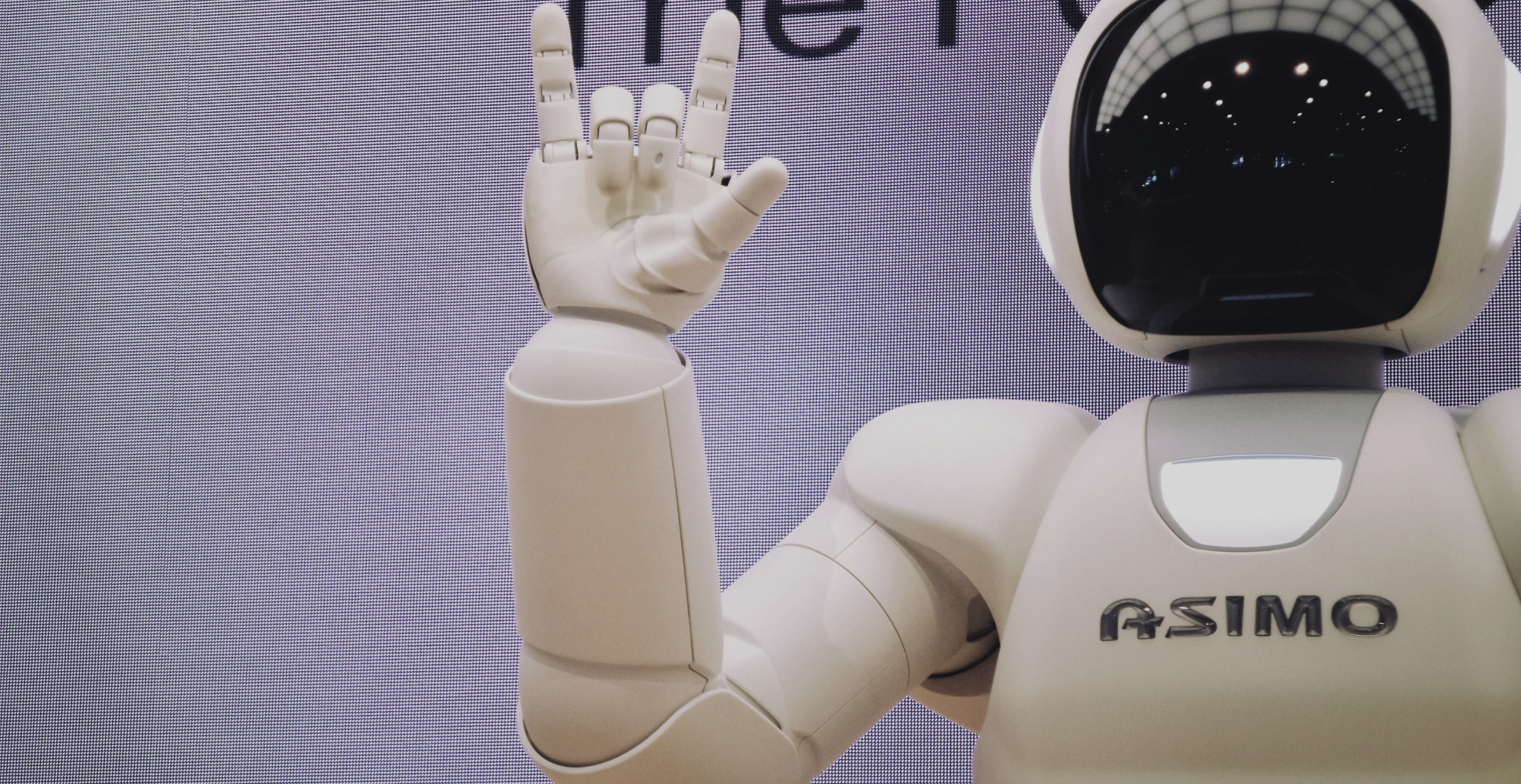


Let's consider a more complex example of scraping product information from an e-commerce website. In this example, we'll use Selenium to scrape the product names, prices, and links from a fictional online store.

from selenium import webdriver
from selenium.webdriver.common.by import By

# Initialize the Chrome WebDriver
driver = webdriver.Chrome()

# Open the target e-commerce website
url = "https://www.example-ecommerce-site.com"  # Replace with the actual URL
driver.get(url)

# Find every product on the page using a CSS selector
product_elements = driver.find_elements(By.CSS_SELECTOR, ".product")

# Create a list to store scraped data
products_data = []

# Extract product information and store it in the list
for product_element in product_elements:
    product = {}
    # Extract product name
    product["name"] = product_element.find_element(By.CSS_SELECTOR, ".product-name").text
    # Extract product price
    product["price"] = product_element.find_element(By.CSS_SELECTOR, ".product-price").text
    # Extract product link
    product["link"] = product_element.find_element(By.CSS_SELECTOR, "a.product-link").get_attribute("href")
    products_data.append(product)

# Print the scraped data
for product in products_data:
    print("Product:", product["name"])
    print("Price:", product["price"])
    print("Link:", product["link"])
    print("\n")

# Close the browser
driver.quit()

In this code, we scrape information from a shopping website using Selenium. First, we import webdriver, and webdriver.Chrome() opens a Chrome window that our script controls.

Next, the browser visits the online store. Using CSS selectors, we find every product on the page (.product) and, inside each one, capture its name, price, and link. Each product is stored as a dictionary, and all of them are collected in a list called "products_data".

Finally, it's time for the big reveal! We print the name, price, and link of every product. When our quest is done, driver.quit() closes the browser.

Remember: to scrape a different site, change the URL and replace the CSS selectors (".product", ".product-name", ".product-price", "a.product-link") with the ones that match that website's HTML.
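The introduction mentioned storing scraped data in a format of your choice. As one possibility (a sketch, not part of the original script), the list of product dictionaries with "name", "price", and "link" keys can be written to a CSV file with Python's built-in csv module, which also takes care of quoting values that contain commas, such as "$1,299.00". The names products_to_csv, save_products, and products.csv are illustrative.

```python
import csv
import io

FIELDS = ["name", "price", "link"]


def products_to_csv(products):
    """Return CSV text with a header row followed by one row per product dict."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()


def save_products(products, filename="products.csv"):
    """Write the products to a CSV file that spreadsheet programs can open."""
    # newline="" keeps the csv module's line endings from being doubled on Windows
    with open(filename, "w", newline="", encoding="utf-8") as f:
        f.write(products_to_csv(products))
```

Calling save_products(products_data) just before driver.quit() would create products.csv in the current directory.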

Conclusion

In this article, we have learned how to use Python and Selenium to scrape the information we want from websites. With a clear understanding of web scraping techniques, we can extract data from online resources and use it for analysis. This opens the door to many possibilities for managing and using data.
